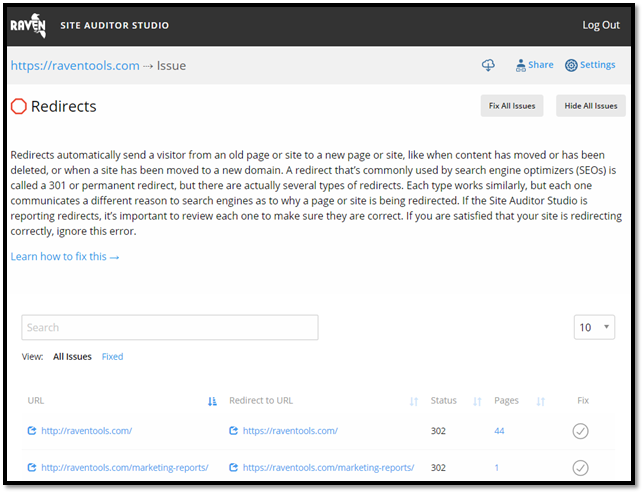

Sometimes you have a reason to move a page, folder, or domain on your website – or remove it from your site altogether.

This is a common practice, but typically results in a “broken” URL. Search engines and users don’t like this because they are then unable to find the content they are looking for. It can also result in a “404” warning – which deters users from staying on your site.

What are you to do in this situation? This is where 301 redirects come in.

Redirects are used for pages, folders and domains that have moved to a different destination on your website. Search engines recommend using 301 redirects for content that has been permanently moved. However, other types of redirects may be appropriate for the changes you're making to your site.

If you change a URL on your website, using a 301 (permanent) redirect is recommended to ensure that users and search engines are sent to the correct page.

301 redirects are helpful for the following situations:

The purpose of a 301 redirect is to ensure that whenever a user or search engine follows a particular URL, they are sent to the right destination.

To set up a 301 redirect, you will need access to your server’s .htaccess file. Then, follow the steps below:

If you have any questions about this process, contact a trusted SEO specialist.
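As a rough illustration of what such a redirect can look like, the sketch below generates `Redirect 301` lines (a directive from Apache's mod_alias module) that could be pasted into an .htaccess file. The paths, the `example.com` domain, and the helper name `build_redirect_rules` are assumptions made for illustration; your host may require a different approach.

```python
def build_redirect_rules(moves):
    """Return .htaccess lines that permanently redirect old paths to new URLs.

    `moves` maps an old URL path (e.g. "/old-page") to its new destination.
    """
    rules = []
    for old_path, new_url in moves.items():
        # "Redirect 301 <old path> <new URL>" is Apache's mod_alias syntax.
        rules.append(f"Redirect 301 {old_path} {new_url}")
    return "\n".join(rules)


# Hypothetical example: one moved page and one moved folder.
rules = build_redirect_rules({
    "/old-page": "https://example.com/new-page",
    "/blog-archive": "https://example.com/blog",
})
print(rules)
```

Generating the lines from a mapping keeps the old and new URLs in one place, which makes the list easier to review before it goes live.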

When pages on your website are blocked by your robots.txt file, this means that search engines are not able to crawl these pages.

This can negatively impact your SEO, as these pages will not be visible to users in the search engine results.

It’s important to fix robots.txt file issues so that your important pages have a chance of ranking in search engines.

A robots.txt file is a file that website owners create to permit or restrict access from search engine robots. It is essentially a “Go” or “Stop” sign that tells search engines whether they can crawl your website.

A “pages blocked by robots.txt” error indicates that there are pages on your website that are not accessible to search engine crawlers. These pages will not show up in the search results and will not be able to be found organically by users.

A robots.txt file is used to manage search engine traffic to your website. You may choose to restrict access to certain pages if you don’t want them to be discovered by search engines or users.

For instance, you may want to hide certain landing pages so that users only end up there through a specific marketing campaign, not via organic search. You may also restrict access to unimportant image, style, or script files.
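To make the "Go" or "Stop" idea concrete, here is a minimal sketch that uses Python's standard `urllib.robotparser` module to check whether a set of rules blocks a URL. The robots.txt content, the `/campaign/` folder, and the `is_crawlable` helper are hypothetical examples, not rules taken from any real site.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules: hide campaign landing pages, allow the rest.
ROBOTS_TXT = """\
User-agent: *
Disallow: /campaign/
"""


def is_crawlable(robots_txt, url, user_agent="*"):
    """Return True if the given robots.txt text lets `user_agent` fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


print(is_crawlable(ROBOTS_TXT, "https://example.com/blog/seo-tips"))
print(is_crawlable(ROBOTS_TXT, "https://example.com/campaign/spring-sale"))
```

Running a check like this against your important URLs before publishing a robots.txt change can catch an accidental block early.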

To see if a page has been crawled by Google, search for the page URL in Google.

If you discover that an important page is being blocked by your robots.txt file and you want to unblock it, or if you want to hide a page from search engines, then you will need to update your robots.txt file (or set up a new one).

How you do this will depend on the CMS and host that you use. We recommend searching for instructions on how to modify your robots.txt file for your specific host.

First, make sure that the robots.txt file is writeable by using FTP. If it is not, you may need to change user permissions.

To test your new or updated robots.txt file, submit it to Google so that it can be crawled.

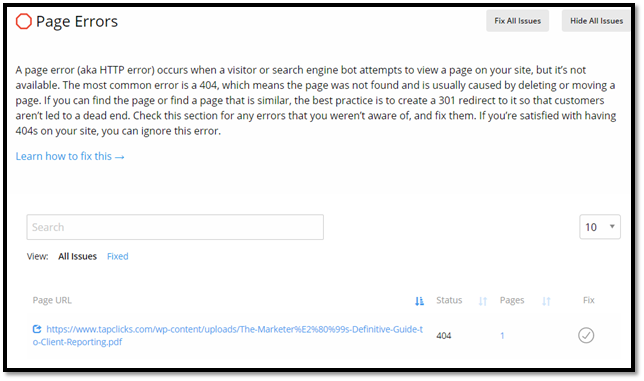

A page server error indicates a problem with your website's hosting provider delivering a page to a search engine robot.

This can be caused by problems with the code on the page or problems with the hosting server.

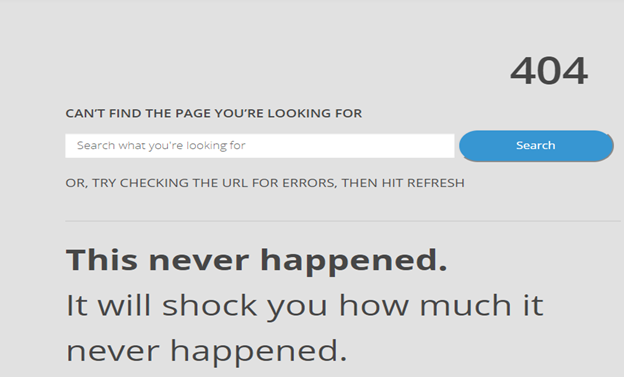

If you encounter a page error, it will often be indicated by a “404” error code on the page. This is an HTTP status code that says the page you were trying to reach could not be found on the server. (Failures of the server itself are reported with 5xx status codes, such as 500 or 503.)

404 errors are bad for SEO because users can encounter these errors after clicking on a page from the search engine results. If users see a 404 or “Not Found” page, they may leave your website thinking that the page no longer exists.

To be clear, this error indicates that the server is reachable, but that the page users are trying to see is not.

You can use crawling tools such as Screaming Frog to find 404 errors on your website. We then suggest setting up 301 redirects to redirect the “broken” URLs to other pages on your website. This helps prevent users from leaving your website.
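Once a crawler has exported its results, picking out the broken URLs is a simple filter. The sketch below assumes the export has been loaded as (URL, status code) pairs; the sample data and the `find_broken_urls` name are made up for illustration.

```python
def find_broken_urls(crawl_results):
    """Return the URLs whose HTTP status code is 404 (Not Found)."""
    return [url for url, status in crawl_results if status == 404]


# Hypothetical crawl export: (URL, HTTP status code) pairs.
crawl_results = [
    ("https://example.com/", 200),
    ("https://example.com/old-pricing", 404),
    ("https://example.com/blog", 301),
    ("https://example.com/team-2019", 404),
]
broken = find_broken_urls(crawl_results)
print(broken)
```

Each URL in the resulting list is a candidate for a 301 redirect to the most relevant live page.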

There can be many reasons why a web page is not accessible or loading properly.

Some common reasons for experiencing page load issues are:

Learn all about 404 errors in our ABCs to 404s Guide.

To address page server errors, first confirm that your server is working properly. If a page still can’t be retrieved via the correct URL, then consider the common reasons for encountering page server errors found in the previous section of this guide.

Often the best way to fix a 404 error is to set up a permanent 301 redirect of the old URL to the new URL. You should also confirm that there is no malware on your site and that you have SSL configured properly.

Having malware or unwanted software on your website can create a negative experience for search engines and for users.

If search engines encounter malware on your website, they will be less likely to show your website in the organic search results. If users see a security error, they will likely leave your website in fear of contracting a virus.

That’s why it’s important to identify and remove malware and other unwanted software from your website.

Your SEO Site Audit will indicate whether malware was detected on your website.

Malware is any software designed to harm a user’s computer or device. The kinds of malware include viruses, spyware, worms, spam, Trojan horses, and more. This malware can be used by phishing sites to scrape user data and even capture their personal and credit card information.

Unwanted software is software that is deceptive or otherwise negatively affects a user’s experience on your website. Such types include software that redirects users to other websites without their consent or software that leaks a user’s personal information without consent.

To determine whether software or applications on your website are malware, read Google’s Unwanted Software Policy.

Some of these guidelines include:

To fix malware or unwanted software issues on your site, ensure that your site follows the guidelines found in Google’s Unwanted Software Policy above.

Remove any software that goes against these guidelines or that was otherwise identified as malware in your Site Audit report.

The search results limit the number of characters that can be displayed for page titles. It’s considered best practice to optimize your page titles with the right length and keyword(s).

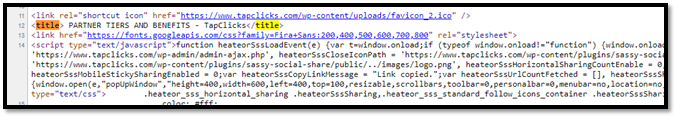

A title tag, otherwise known as your page title, is the title that appears in the search results. It is the HTML code that specifies the title of the page or post. Users can click on the page title directly from the search engine search results.

Titles give users and search engines context for what the page or post is about. It’s often what entices users to visit your website.
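Because the title is just an HTML element, it can be checked automatically. The following sketch uses Python's built-in `html.parser` to pull out the `<title>` text and flag titles that are missing or long. The 60-character limit is an assumed rule of thumb, not a figure from this guide, and `check_title` is a hypothetical helper.

```python
from html.parser import HTMLParser


class TitleParser(HTMLParser):
    """Collect the text inside the page's <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title":
            self._in_title = True
            self.title = ""

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def check_title(html, max_length=60):
    """Return (title, problem); problem is None when the title looks fine."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    title = (parser.title or "").strip()
    if not title:
        return title, "missing"
    if len(title) > max_length:  # assumed limit; adjust to your own guideline
        return title, "too long"
    return title, None


print(check_title("<html><head><title>Pink Donut Recipes</title></head></html>"))
```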

To increase your chances of ranking high in the search results, and of search engines showing the correct title for the page, you should follow page title SEO best practices.

Here are a few tips for optimizing your page titles:

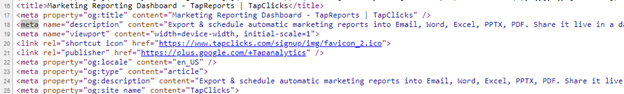

Meta descriptions give users and search engines an indication of what your page or post is about. Since the search results limit the number of characters that can be displayed for meta descriptions, it’s considered best practice to optimize them with the right length and keyword(s).

The meta description is a section of text that appears in the search results to give users context as to what the page or post is about. It is an HTML element that summarizes the page’s content.

Having a great meta description can increase your chances of getting clicks from the search results.

To increase your chances of ranking high in the search results, and of search engines showing the correct description for the page, you should follow meta description SEO best practices.

Here are a few tips for optimizing your meta descriptions:

For a complete guide on how to write and optimize meta descriptions for better search engine rankings, check out this article on the Raven Tools blog.

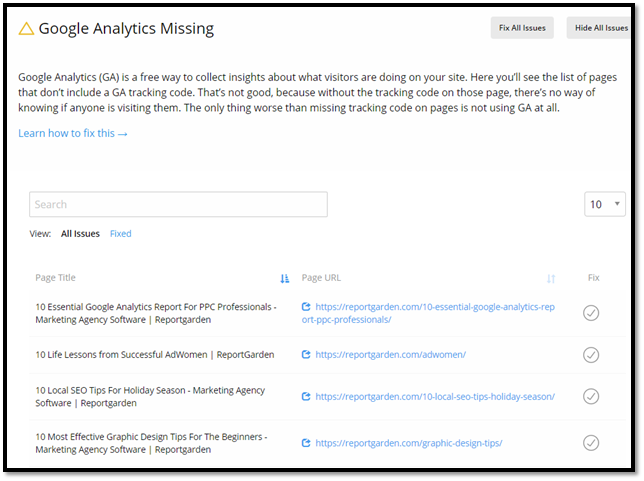

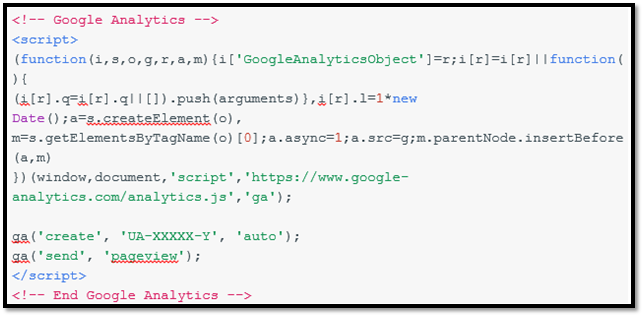

Any website can use Google Analytics code to track site usage, traffic, and visitor behavior. Pages that are missing Google Analytics tracking code don't record that activity.

Get all the data you need to measure site traffic by setting up Google Analytics.

Google Analytics is an analytics tool offered by Google that tracks traffic to your website. The tool is currently offered inside the Google Marketing Platform.

Website owners who want to see how many users are visiting their site and where that traffic is coming from should install Google Analytics to track and monitor these metrics.

Google Analytics is an invaluable tool for monitoring and measuring website activity. You can set up Google Analytics by visiting analytics.google.com.
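To spot pages that are missing the tracking code, one simple option is to look for the script URLs that Google's standard snippets load. This sketch is a plain substring check, so it will not detect tracking installed in other ways (for example through a tag manager container); the sample HTML and the `G-XXXXXXX` ID are placeholders.

```python
# Script sources loaded by Google's standard tracking snippets.
GA_SCRIPT_MARKERS = (
    "googletagmanager.com/gtag/js",       # gtag.js snippet
    "google-analytics.com/analytics.js",  # older analytics.js snippet
)


def has_analytics_snippet(html):
    """Return True if the page source references a known Analytics script."""
    return any(marker in html for marker in GA_SCRIPT_MARKERS)


tracked_page = (
    '<head><script async '
    'src="https://www.googletagmanager.com/gtag/js?id=G-XXXXXXX"></script></head>'
)
untracked_page = "<head><title>About us</title></head>"

print(has_analytics_snippet(tracked_page))
print(has_analytics_snippet(untracked_page))
```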

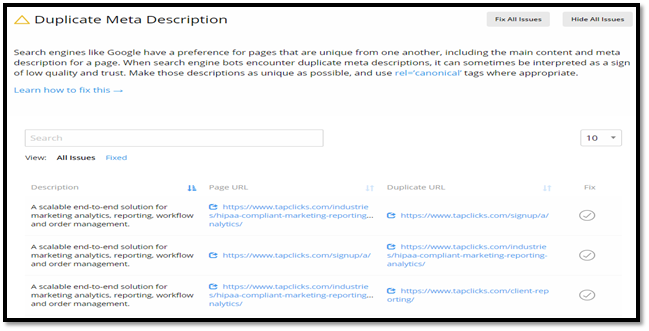

Search engines prefer it when your website has unique meta descriptions for each page.

Unique meta descriptions help their algorithms interpret your content and its quality. Search engines may ignore any pages with duplicate meta descriptions.

Meta descriptions give users and search engines a summary of what the page or post is about. Assuming you have unique pages on your website, you want unique meta descriptions to match.

If you have duplicate meta descriptions on different pages of your site, search engines will get confused as to what the content is about. This can hinder your search engine rankings.

If a site audit determined that you have duplicate meta descriptions on your site, you will need to address this to improve your SEO.

Follow the steps below to find and fix duplicate meta description issues.
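One way to find duplicates yourself, sketched here under the assumption that you already have each page's HTML source on hand, is to extract every meta description and group the URLs that share one. The class and function names, and the sample pages, are illustrative only.

```python
from collections import defaultdict
from html.parser import HTMLParser


class MetaDescriptionParser(HTMLParser):
    """Capture the content of <meta name="description" ...>."""

    def __init__(self):
        super().__init__()
        self.description = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()


def find_duplicate_descriptions(pages):
    """Map each repeated description to the list of URLs that share it.

    `pages` maps a URL to that page's HTML source.
    """
    urls_by_description = defaultdict(list)
    for url, html in pages.items():
        parser = MetaDescriptionParser()
        parser.feed(html)
        if parser.description:
            urls_by_description[parser.description].append(url)
    return {d: urls for d, urls in urls_by_description.items() if len(urls) > 1}


# Hypothetical pages: two share a description, one is unique.
pages = {
    "https://example.com/donuts": '<meta name="description" content="Fresh donuts daily.">',
    "https://example.com/bagels": '<meta name="description" content="Fresh donuts daily.">',
    "https://example.com/about": '<meta name="description" content="Meet our bakers.">',
}
print(find_duplicate_descriptions(pages))
```

Each group in the output is a set of pages that needs a rewritten, page-specific description.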

Fix duplicate meta descriptions to improve the SEO of your website.

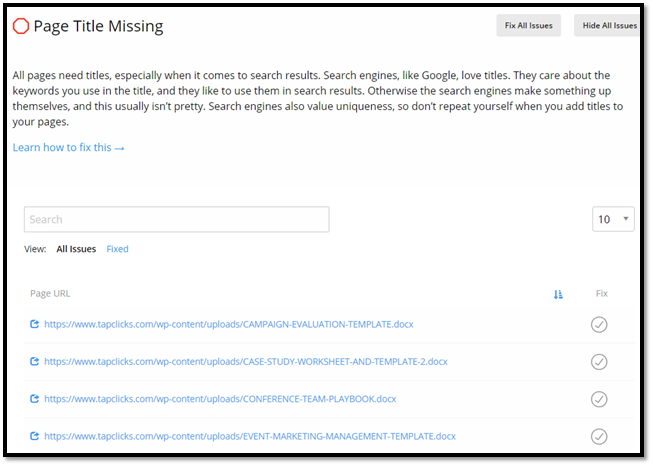

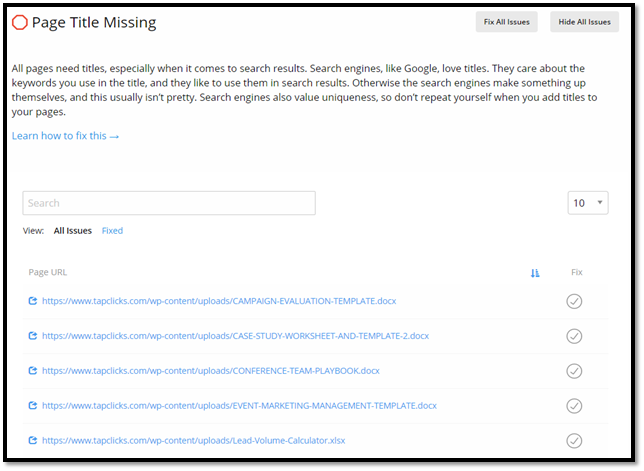

When it comes to SEO, it’s important that search engines and users know what your content is about.

One way to indicate this is by including a page title, otherwise known as the title tag.

Page titles are critical to giving searchers quick insight into the content of a result. A title is a primary piece of information they use to decide which result to click on, so it's important to use high-quality, descriptive titles on your pages.

If your page or blog post is missing this key element, your website rankings and organic traffic may suffer.

The page title or title tag is considered to be one of the most important on-page SEO factors. However, a site audit may determine that one or more page titles are missing from your site.

This simply means that a title has not been specified for a given page. The HTML element that specifies the page title is missing.

Each page and post on your website should have a title tag.

To fix any “missing page title” errors, identify the URL that is missing the page title. Then, log into your website and include a new title tag.

Some best practices for writing optimized title tags are:

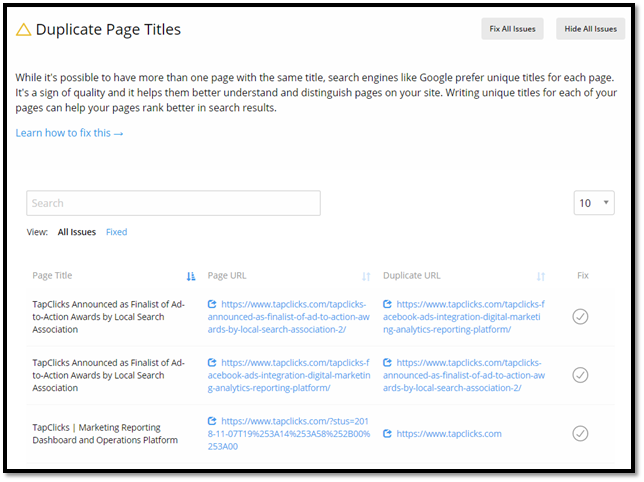

Each page should have a unique title tag in order to avoid duplicate title tag issues.

Page titles tell search engines and users what your page content is about. They are considered to be one of the most important on-page SEO elements.

That’s why it’s important that your page titles are optimized and unique to each page. This increases your website’s chance of ranking high in the search results and enticing users to click on the page.

A “duplicate page title” error comes up when there are two or more pages on your website that have the same page title.

This can be especially confusing to search engines, as they read that there are multiple pages on your site that have the same content. This indicates that your content may not be high quality and/or that your website provides a poor user experience.

Having duplicate page titles is bad for SEO because search engines are less likely to rank your site if there are glaring content issues.

Each page and post on your website should have its own unique title tag.

To fix any “duplicate page title” errors, identify the URLs that have the duplicate page titles. Then, log into your website and write a new, unique page title for each page or post.

Some best practices for writing optimized title tags are:

Search engines prefer it when your website has a unique page title for each page. Identical page titles could confuse website visitors trying to navigate your site, as well as the algorithm trying to understand the page. Search engines may ignore any pages with the same titles.

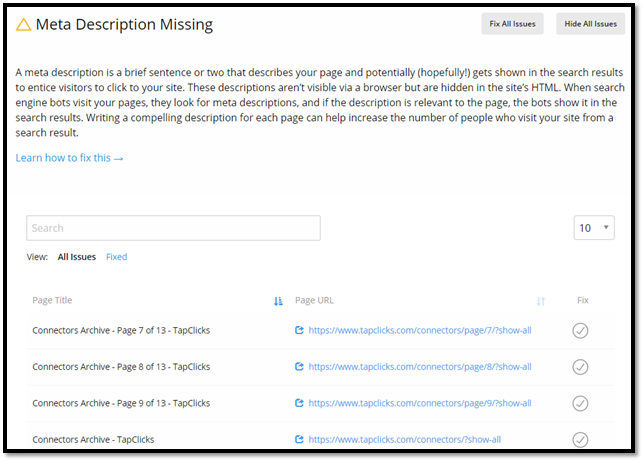

There are many on-page SEO factors that play a role in how your website ranks in search engines. It’s important that all of these elements are in place so that your website has a good chance of generating organic traffic.

A site audit will identify any SEO issues that may be holding your website back from ranking high in the search results. One such issue could be a missing meta description.

The description attribute (a.k.a. meta description) is a short, helpful summary of your page's content. It is a primary piece of information searchers use to decide which result to click on.

Having a description attribute doesn't guarantee that a search engine will use it in its search results, but in most cases it will. It’s important that every page and post on your website has an optimized meta description.

To fix any “missing meta description” errors, identify the URLs that are missing a description. Then, log into your website and write a new meta description for each page or post.

Some best practices for writing optimized meta descriptions are:

Be sure that every page or post has a meta description so that search engines are inclined to show your website in the search results and so that users are drawn to click on your page.

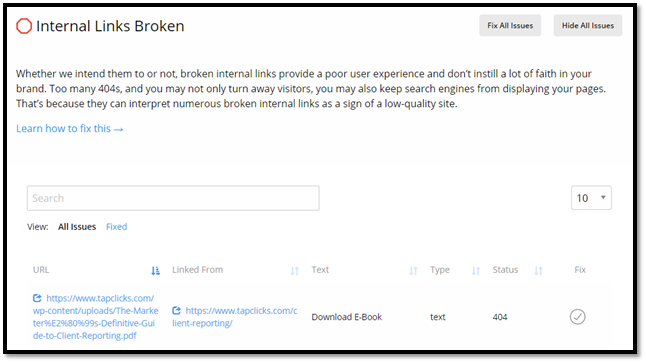

An internal link is a hyperlink that you purposely put in your content to lead to pages on your own website. If your internal links aren’t working, here are some possible reasons why and instructions on how to fix them:

To fix this, simply locate the correct page and update the hyperlink. Carefully scan the entire URL to see if you’ve accidentally typed characters that are easy to misplace (such as uppercase O and the number 0).

Look into getting a redirect for your website if you’ve changed or moved it. Since the old hyperlinks still point to the old domain, you can either manually adjust all the broken internal links or apply a 301 redirect, which will permanently redirect your old domain to your new one.

Check your site’s internal storage or media gallery if you’ve accidentally deleted the file. If it doesn’t show up, re-upload and replace the dead link. Alternatively, if you’re hosting your data on a third-party site for download and storage, check whether it has been taken down.

This problem is common for landing pages that implement plugins to capture user information. It’s best to consult with your webmaster or IT department about getting a fix. If you aren’t knowledgeable in the way your site is coded, messing with it can cause a cascade of page failures.

There are two ways to detect broken internal links. The easiest is to review the 404 crawl errors that Google reports in its search console. Alternatively, running a regular check for broken or dead links can drastically lower the chances of them appearing.
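A regular check can be partly automated. The sketch below extracts the links from a page, keeps those that stay on the same host, and reports any that are not in a list of URLs known to exist. The `known_urls` set stands in for whatever source of truth you have (a sitemap or a crawl export); it and the helper names are assumptions.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkParser(HTMLParser):
    """Collect the href value of every <a> tag."""

    def __init__(self):
        super().__init__()
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)


def internal_links(page_url, html):
    """Return absolute URLs of links that stay on the same host as page_url."""
    parser = LinkParser()
    parser.feed(html)
    host = urlparse(page_url).netloc
    absolute = [urljoin(page_url, href) for href in parser.hrefs]
    return [url for url in absolute if urlparse(url).netloc == host]


def broken_internal_links(page_url, html, known_urls):
    """Return internal links that do not point at any known page."""
    return [url for url in internal_links(page_url, html) if url not in known_urls]


# Hypothetical page with two internal links and one external link.
html = (
    '<a href="/pricing">Pricing</a>'
    '<a href="post-1">First post</a>'
    '<a href="https://other.example.org/x">Partner</a>'
)
known_urls = {"https://example.com/pricing"}
print(broken_internal_links("https://example.com/blog/", html, known_urls))
```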

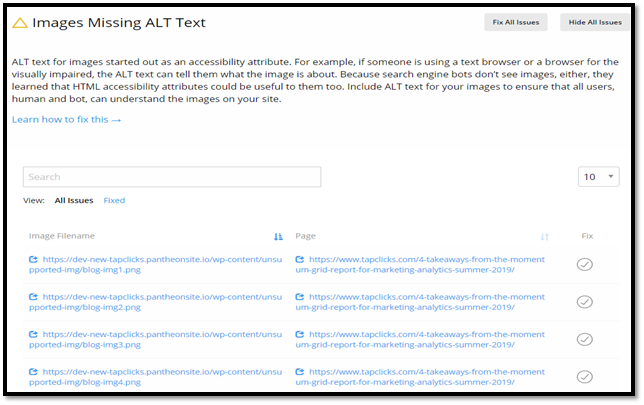

Websites and search engines like Google rely heavily on alt text (text that describes an image) to determine page ranking and relevance. If your pictures are missing alt text, you’re missing out on a considerable chunk of SEO.

Alt text is an image’s way of letting search engines know what kind of content it is. Since web crawlers aren’t sophisticated enough to scan the image itself, they rely on alt text to categorize and rank it online. Alt text is useful for improving your website’s accessibility—especially when your visitor can’t see the image displayed. When your images don’t load, the space they occupy will instead contain the alt text.

There are two tools that you can use to test your site for missing alt texts: accessibility auditing and the slow network connection emulator. By running your website through these tools, you can immediately see which images of your website lack alt text.

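Alternatively, you can also check your pages individually. Right-click the image, choose your browser’s “Inspect” option, and look for an alt attribute on the image element (hovering over an image usually shows its title attribute rather than its alt text). If there isn’t any, you can go to the next step.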

Go to your image gallery or media storage where your images are kept. Clicking or highlighting the image should bring up editing options. You’ll find a couple of options here that you can set like image size, alignment, and alt text. Simply fill in the alt text box, click update, and refresh your page to apply the changes.

You can also set the alt text as soon as you upload the image to your content, saving yourself valuable time. It’s good practice to always upload media with alt text.
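If you would rather scan a page's HTML than click through images one by one, a small parser can list the images that lack alt text. This is a minimal sketch: it treats an empty `alt=""` as missing, even though an empty value can be a deliberate choice for purely decorative images. The function name and sample markup are hypothetical.

```python
from html.parser import HTMLParser


class MissingAltParser(HTMLParser):
    """Record the src of every <img> that has no (or empty) alt text."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attrs = dict(attrs)
            if not (attrs.get("alt") or "").strip():
                self.missing.append(attrs.get("src"))


def images_missing_alt(html):
    """Return the src values of images without usable alt text."""
    parser = MissingAltParser()
    parser.feed(html)
    return parser.missing


page = (
    '<img src="donut.jpg" alt="Pink frosted donut">'
    '<img src="banner.png">'
    '<img src="logo.png" alt="">'
)
print(images_missing_alt(page))
```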

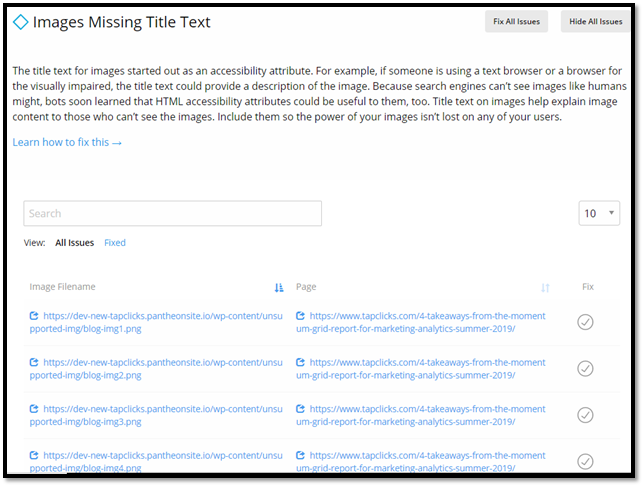

When you upload images to your website without a proper name, they're labeled by default with titles like image_00 or Untitled.jpg. But if you want to improve the ranking of these images (and their content), use title text whenever you can.

Running Google’s accessibility auditor can help you pinpoint problems with your images, particularly their titles and alt texts. You can also look into the media gallery of your website and check/replace the image titles individually and reapply the corrected hyperlink for good measure.

Go to your media storage or image gallery on your website. Bring up the editing options for your images, and you should find the one that allows you to update their titles. Some sites let you do this en masse, while others may require you to go through each image individually in order to change them.

First, you’ll want to be as descriptive yet concise as possible. Always avoid going with the default filename that the image has. Google can’t index names like IMG_00023.png correctly, as it won’t count as a relevant keyword or search term.

Name your images according to their content. For example, you can rename a picture of a pink donut as pink_frosted_donut.jpg. If you must, you can group specific images near the relevant keywords in the content. So for pictures about pink donuts, place them underneath an H2 tag talking about donuts and the color pink.
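Renaming many images by hand is tedious, so a small helper can turn a short description into a consistent filename. The sketch below follows the underscore style of the donut example; the `image_filename` function is an illustrative assumption rather than part of any particular CMS.

```python
import re


def image_filename(description, extension="jpg"):
    """Turn a short description into a lowercase, underscore-separated filename."""
    # Keep only runs of letters and digits, dropping punctuation and spaces.
    words = re.findall(r"[a-z0-9]+", description.lower())
    return "_".join(words) + "." + extension


print(image_filename("Pink frosted donut"))
```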

Doing this will not only help Google find and rank your page; it will also improve user experience and internal linking. Crawlers also use image titles and alt text to display relevant results, and depending on your content, that could make or break your website.

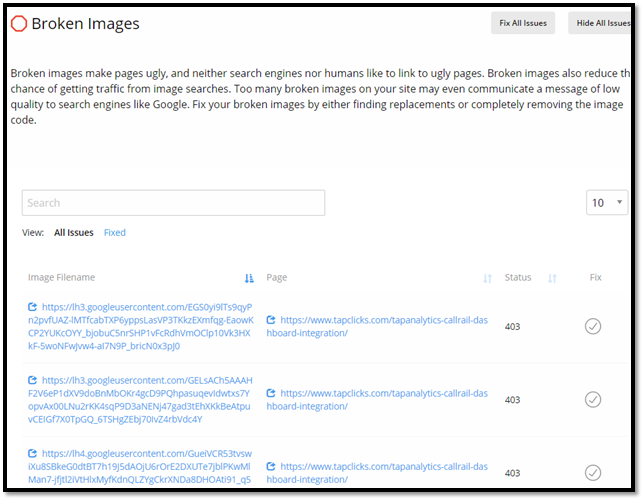

There are several reasons why your pictures failed to load:

If you’ve manually written out the file path for your images, check if you’ve written them correctly. File names are case-sensitive, and your website cannot compensate for any mistakes. They’re the most common cause of images that do not load on websites and are a relatively easy fix.

You may have misspelled the file’s extension rather than the name. If your file is in a format that isn’t specified by the file path, the image will not load even if the names are the same. Always check if your HTML specifies .jpg, .png, or .gif for image paths.
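Both kinds of mistake, wrong letter case and wrong extension, can be caught by comparing the path written in your HTML against the files that were actually uploaded. The sketch below assumes you can list those files; the paths and the `diagnose_image_path` helper are invented for illustration.

```python
import os


def diagnose_image_path(src, uploaded_files):
    """Explain why `src` may not load, given the file paths that actually exist."""
    if src in uploaded_files:
        return "ok"
    # Same path when letter case is ignored: a case mismatch.
    for name in uploaded_files:
        if name.lower() == src.lower():
            return f"case mismatch: did you mean {name}?"
    # Same path apart from the extension: an extension mismatch.
    stem = os.path.splitext(src)[0]
    for name in uploaded_files:
        if os.path.splitext(name)[0] == stem:
            return f"extension mismatch: did you mean {name}?"
    return "file not found"


uploaded = ["images/Donut.jpg", "images/logo.png"]
for src in ["images/Donut.jpg", "images/donut.jpg", "images/logo.jpg", "images/hero.gif"]:
    print(src, "->", diagnose_image_path(src, uploaded))
```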

Sometimes the issue can be with the file itself. If your image has been removed or corrupted in the database, your image will not load. You can try deleting and re-uploading the image to your library, or reloading the page itself. Sometimes you may have just forgotten to upload the file—it’s an easy mistake to make, but thankfully an easy one to fix.

Alternatively, if you are hosting your images on a third-party website, their server status will affect your picture loading. If their servers aren’t up or they’ve removed your image, it won’t load on your website. Hosting your images on your servers or re-uploading them on the third-party website can fix this problem.

Finally, the issue may be with your servers or the database itself. Small setting changes can vastly affect the speed and quality of your images loading, and in the worst cases cause the process to crash. Looking at server logs can show you the source of the problem. Alternatively, if you’re hosting your images on a third-party site, moving them to your internal site database can bypass this problem.

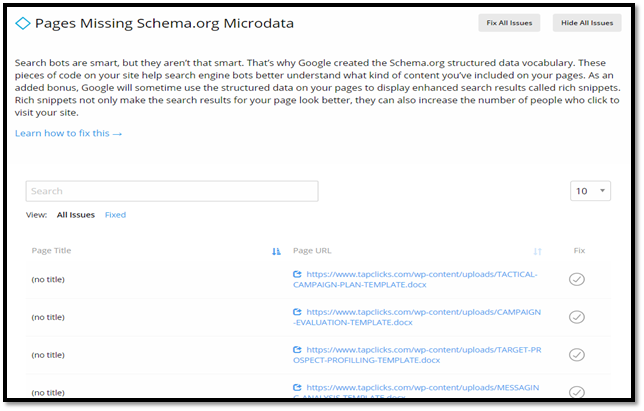

Before diving into this, it’s important to get three distinctions out of the way:

There are some fundamental basics that you need to fill out for your content to rank well. Meta descriptions, headers, image and alt text are the most prominent examples. They help search engines like Google to index your page, understand the content, and rank it accordingly once people input the specific search term.

However, a page using Schema.org microdata has a further set of options used to display and rank its content. It does this by assigning values to the attributes in your content, such as a specific year, a personal citation, or a mention of a local place. This helps web crawlers fine-tune their results so you can target the audience you want to reach.

Schema.org’s list of microdata can help you build a more coherent structured data markup by accurately labeling and describing your content in more detail. For example, you can add properties like titles, authors, ratings, reviews, the specific event, and date of publication to web pages.

This allows you to build your page to reach the audience you want, but it also helps search engines (which also write the rules for microdata) to pull and display the relevant information. Upon search, a page that uses Schema.org microdata looks more legitimate and efficiently provides crucial information.

All of this can help your page rank higher by telling web crawlers exactly what content is inside. It refines the values so you control where you’ll pop up in search. There are also tools, such as the one from Raven Tools for JavaScript Object Notation for Linked Data (JSON-LD), that can simplify Schema.org structured data integration.
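To show what JSON-LD markup looks like in practice, here is a minimal sketch that builds a Schema.org `Article` object and wraps it in the script tag used to embed JSON-LD in a page. The headline, author, and date are made-up sample values, and `article_json_ld` is a hypothetical helper, not the Raven Tools generator.

```python
import json


def article_json_ld(headline, author_name, date_published):
    """Return a <script> snippet describing an article with Schema.org JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Article",
        "headline": headline,
        "author": {"@type": "Person", "name": author_name},
        "datePublished": date_published,
    }
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


# Sample values for illustration only.
snippet = article_json_ld("How to Frost a Pink Donut", "Jane Doe", "2024-01-15")
print(snippet)
```

The resulting snippet would typically be placed in the page's HTML; other content types (events, reviews, local businesses) use different `@type` values and properties.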

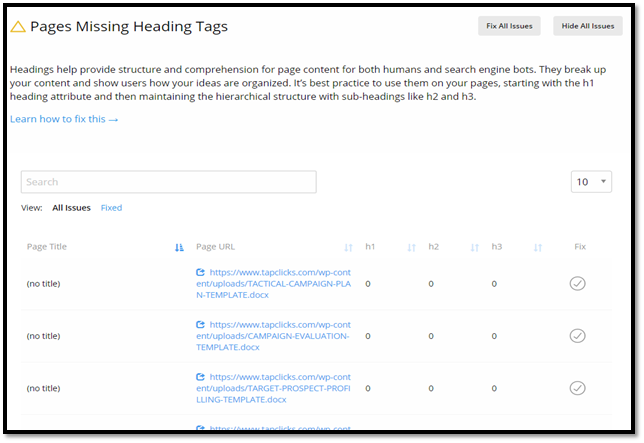

Headers are a valuable part of any website, as they often contain the first and most important thing a user sees. Virtually any kind of site can make use of a header, but it takes a little more effort to use it well.

Website headers are located at the top of your website; their primary function is to promote your content although they are useful for:

Website headers are also valuable SEO tools, using tags that can help search engines index and rank your page. They contain metadata and microdata that can give your users a better idea of what your site has to offer, and their visual placement naturally draws attention.

Sometimes a header isn’t required, like on sales and landing pages. You can forego your traditional website header by replacing it with your logo or something static and bring up the content higher on the page. It might be the best option if you’re running a simple website that needs to be responsive when viewed from different devices.

But if you need a header and you don’t have one, there’s no need to panic. Headers can be as simple as images or text, or as elaborate as embedded videos and lead capture forms. The best way to decide on what kind of header you need is to ask yourself how having one can help your website’s visibility and structure.

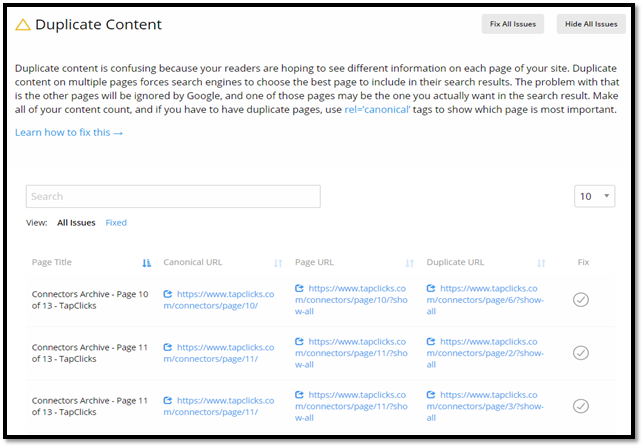

No one likes a copycat. And when it comes to content, search engines don’t like copies either.

Even if it’s unintentional, having duplicate content on your website is an SEO no-no. To increase your website’s chances of ranking in the search engine results, you will have to fix any duplicate content issues found during your site audit.

Duplicate content generally refers to blocks of content within your website (or on another domain) that completely match content elsewhere on your website or on another website.

This isn’t always done on purpose. For instance, some “non-malicious” duplicate content issues could be:

While search engines do a good job of choosing a version of the content to show in their search results, it's best practice to reduce or eliminate duplicate content as much as possible.

To fix any “duplicate content” errors, first identify the URLs that house duplicate content.

Then, you can follow the best practices below to remove duplicate content and prevent it from being an issue in the future.

As a hint, some SEOs have produced studies suggesting that you can get away with quite a bit of copying without falling into this penalty. A rule of thumb to follow is to reproduce no more than 25% of existing content unless you are creating location pages. In the case of location pages you can replicate your own pages without a penalty (most of the time).
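To put a number on how much of a page is reused, one possible measure is the share of its five-word sequences ("shingles") that also appear in an existing page. This is only an assumed way of estimating overlap, not how any search engine scores duplication, and the sample texts are deliberately artificial.

```python
def shingles(text, size=5):
    """Return the set of overlapping word sequences ("shingles") in the text."""
    words = text.lower().split()
    return {tuple(words[i:i + size]) for i in range(len(words) - size + 1)}


def reused_fraction(new_text, existing_text, size=5):
    """Fraction of the new text's shingles that also appear in the existing text."""
    new = shingles(new_text, size)
    if not new:
        return 0.0
    return len(new & shingles(existing_text, size)) / len(new)


existing = "a b c d e f g h"
new = "a b c d e x y z"
fraction = reused_fraction(new, existing)
print(fraction)
print("over the 25% rule of thumb" if fraction > 0.25 else "within the 25% rule of thumb")
```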

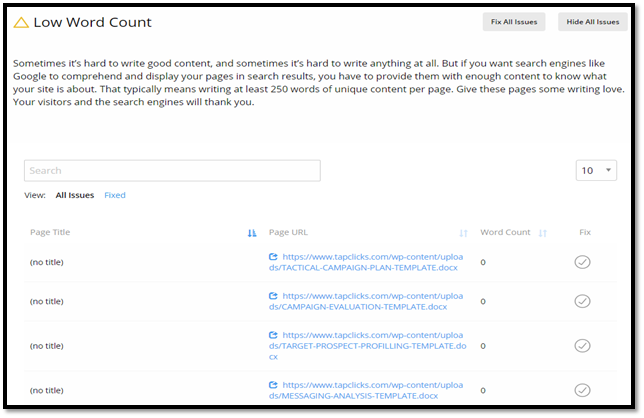

The best way to generate more organic traffic to your website is to give users and search engines what they want. That includes providing content that is informative, valuable, and search engine optimized.

Unfortunately, pages with a low word count may not perform well in search results. That’s because more words give search engine algorithms more context to understand the content and its quality.

SEO best practices suggest publishing content with more than 250 words.
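A quick way to find pages under that threshold is to strip the markup and count the remaining words. The sketch below does this with Python's built-in HTML parser, skipping script and style contents; how it splits words (on whitespace) is a simplifying assumption, so counts may differ slightly from those in an audit tool.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collect page text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def word_count(html):
    """Count whitespace-separated words in the page's text."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return len(" ".join(parser.chunks).split())


def is_thin_content(html, threshold=250):
    """Return True if the page does not have more than `threshold` words."""
    return word_count(html) <= threshold


print(word_count("<script>var a = 1;</script><p>Hello world</p>"))
```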

While more content isn’t necessarily better (some studies show a point of diminishing returns), you at least want enough content to effectively cover the topic.

Users are looking for information that helps them solve a problem or find a service or product. If your content is long enough to describe the topic in detail, you have a good chance of drawing users in.

There are components that go into what is considered “great SEO content”. Here are a few best practices to follow:

Improve your SEO by fixing content that has a low word count and by optimizing your content for search engines and users. Keep in mind that Google pulls from its index of content, which means you need enough content on a page for Google to draw on when surfacing it in its results.

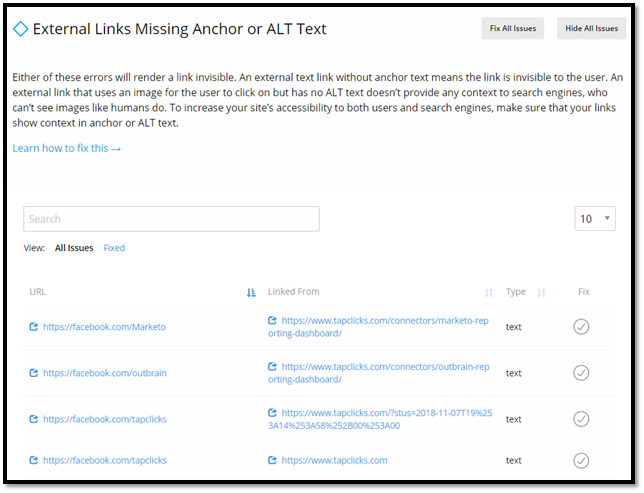

Linking out to high-quality sources within your content is a great way to provide additional value to your readers.

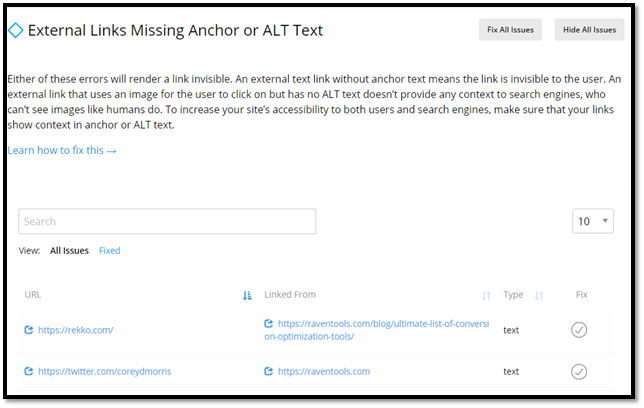

However, you’ll also want to make sure that the text used in your external links is optimized for search engines.

Anchor text is the text used when you link out to an external website. Including text for links helps search engines and users better understand the context of the page you're linking to.

If a text link doesn't have any text, it probably means the link is invisible to the user. If a link wraps around an image that doesn't have ALT text, it's the same as excluding text from a text link.

It’s best to include anchor text and ALT text so that users and search engines understand what content is being linked to. There are several types of anchor text, including:

Optimized anchor text will give users and search engines an indication of what the external page is about. It may or may not include an SEO keyword, if fitting. It’s better to use anchor text that reads naturally rather than stuffing in a bunch of keywords or repeatedly saying “Click here!”

Best practices include making sure the anchor text is relevant and using different types of anchor text throughout the page.
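These checks can be approximated in code. The sketch below lists links whose anchor text is empty (counting an image's ALT text as the link text) or generic. The list of "generic" phrases is an assumption chosen for the example, and the helper names are hypothetical.

```python
from html.parser import HTMLParser

# Assumed examples of anchor text that says little about the destination.
GENERIC_ANCHORS = {"click here", "here", "read more", "learn more"}


class AnchorTextParser(HTMLParser):
    """Record (href, anchor text) for each link; image ALT text counts as text."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a":
            self._href = attrs.get("href")
            self._text = []
        elif tag == "img" and self._href is not None:
            self._text.append(attrs.get("alt") or "")

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def audit_anchor_text(html):
    """Return (href, issue) pairs for links with empty or generic anchor text."""
    parser = AnchorTextParser()
    parser.feed(html)
    issues = []
    for href, text in parser.links:
        if not text:
            issues.append((href, "empty"))
        elif text.lower().rstrip("!.") in GENERIC_ANCHORS:
            issues.append((href, "generic"))
    return issues


page = (
    '<a href="/a">Click here!</a>'
    '<a href="/b"><img src="x.png"></a>'
    '<a href="/c"><img src="y.png" alt="Pink donut"></a>'
    '<a href="/d">pink donut recipes</a>'
)
print(audit_anchor_text(page))
```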

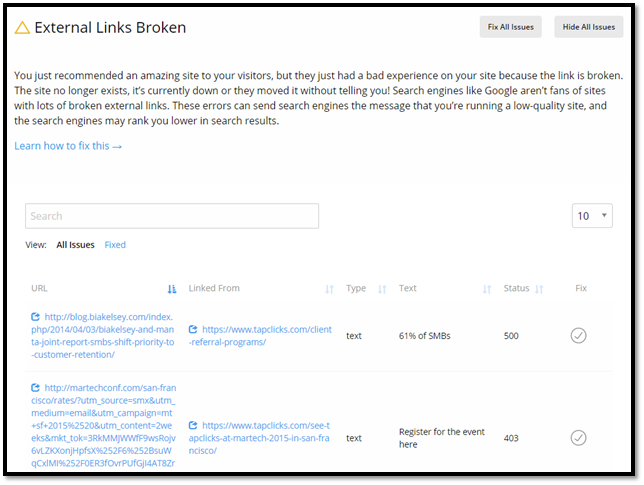

Often you will link out to other websites and sources within your content in order to provide more value to your readers. When you link to reputable websites, this offers your readers additional information about your topic.

However, sometimes this link can come up as “broken”, hindering the user’s experience (and harming your SEO).

An external link is a link from your website to another website or source. When external links are broken, it means that links pointing to other websites cannot find the destination page (they receive a 404 page error or a server error).

If search engine bots find too many broken external links, they may trigger a "low quality" site signal to a search engine's algorithm, resulting in poor search result performance.

Use the Site Auditor tool from Raven Tools to identify any broken external links on your site.

If the source or website no longer exists, you can either remove the link or link to another source. You may also choose to link to a different page on your own website.

Sometimes the outside source still exists, but the URL changed. To figure out if that’s the case, do a Google search for the original source. Google may bring up the new page/URL, and then you can change the old external link to the new URL.

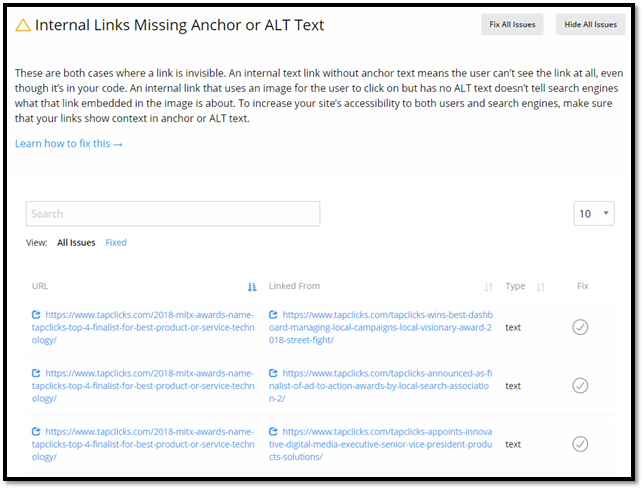

Oftentimes your content will include links to other pages on your website. This is a great way to provide additional value to users and direct them to additional resources on your site. It also makes it easier for search engines to crawl your website.

However, optimizing your internal links doesn’t stop there. You also want to be sure that your internal links use effective anchor text.

Internal link anchor text is the link text used when linking from one page of your website to another. Including text for internal links helps search engines understand the context of the page you're linking to. For example, your anchor text may be something like “Check it out” or “See our latest article”.

If a text link doesn't have any text, the link is likely invisible to the user.

It’s best to include anchor text and ALT text so that users and search engines understand what content is being linked to. This will improve the user experience and make it easier for search engines to crawl and understand your website content.

There are several types of anchor text, including:

Internal linking best practices include making sure the anchor text is relevant to the page and using different types of anchor text throughout the page.

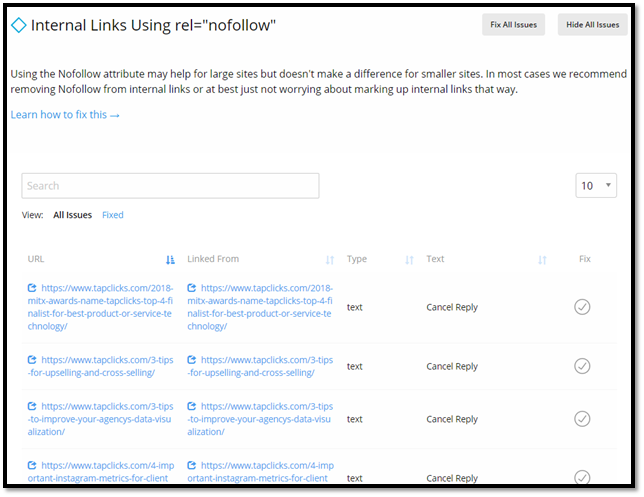

While including internal links to other pages on your site is a way to provide additional value to users, you can run into issues if you use a rel="nofollow" attribute.

What is rel="nofollow" and what does it matter to your website SEO? Learn more below.

The nofollow attribute is used to annotate a link in order to tell search engines "I can't or don't want to vouch for this link." In Google, links using the nofollow attribute also don't pass PageRank and don't pass anchor text.

It's considered a best practice to not use the nofollow attribute for internal links because you're essentially telling search engines to not trust parts of your site.

To remove rel="nofollow" from your internal links, use the Site Auditor tool from Raven Tools to find any internal links that use this attribute.

Then, remove the attribute to ensure that the link is “followed”. This shows search engines that you can vouch for the source – as it is on your own website, after all.
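If you want to double-check a page by hand, the following sketch finds links that carry `rel="nofollow"` and sorts them into internal and external by comparing hosts. It is an illustrative stand-in, not the Site Auditor's method, and the URLs are placeholders.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class NofollowParser(HTMLParser):
    """Collect the href of every <a> whose rel attribute includes nofollow."""

    def __init__(self):
        super().__init__()
        self.nofollow_hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        attrs = dict(attrs)
        # rel can hold several space-separated values, e.g. "nofollow noopener".
        rel_values = (attrs.get("rel") or "").lower().split()
        if "nofollow" in rel_values and attrs.get("href"):
            self.nofollow_hrefs.append(attrs["href"])


def nofollow_links(page_url, html):
    """Split nofollow links into internal and external lists of absolute URLs."""
    parser = NofollowParser()
    parser.feed(html)
    host = urlparse(page_url).netloc
    result = {"internal": [], "external": []}
    for href in parser.nofollow_hrefs:
        url = urljoin(page_url, href)
        kind = "internal" if urlparse(url).netloc == host else "external"
        result[kind].append(url)
    return result


page = (
    '<a href="/pricing" rel="nofollow">Pricing</a>'
    '<a href="/blog">Blog</a>'
    '<a href="https://other.example.org/" rel="nofollow noopener">Partner</a>'
)
print(nofollow_links("https://example.com/", page))
```

Any URL in the internal list is a candidate for having the attribute removed.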

Including external links to outside sources and websites is a great way to provide additional value to your readers. Of course, you want to be sure that those sites are high-quality and that linking to them does not harm your own website SEO.

The rel="nofollow" attribute can be used to prevent passing your own PageRank to an outside website – but do you always want to do this?

Learn what rel="nofollow" is and why it matters to your website SEO below.

The nofollow attribute is used to annotate a link in order to tell search engines "I can't or don't want to vouch for this link." In Google, links using the nofollow attribute also don't pass PageRank and don't pass anchor text.

Sometimes you will want to use rel="nofollow" to prevent passing your own site authority to an outside website. This is typically the case if the website is a competitor’s website or if the website has low authority.

However, search engines may question why you would link to an outside site that you will not vouch for. Therefore, use rel="nofollow" sparingly and always consider how using it, or not using it, may affect your own site SEO.

To remove rel="nofollow" from your external links, use the Site Auditor tool from Raven Tools to find any external links that use this attribute.

Then, remove the attribute to ensure that the link is “followed”. This shows search engines that you don’t mind vouching for this source.