Mention search engines to anyone these days and chances are the first thing they’ll think of is Google, and rightly so; Google does a great job. However, other search engines do exist, such as Yahoo!, Ask and Bing, to name but a few. As SEO specialists, it’s important to understand how most, if not all, search engines work. In fact, it’s crucial that SEO specialists understand how search engines collect data, rank websites and return results.

Search Engine Basics

As originally stated in Danny Sullivan’s article, How Search Engines Work, every search engine or directory uses one of the following ways to collect data:

- Crawler-Based Search Engines use a spider, also known as a crawler or a bot

- Directories, like DMOZ or Wikipedia, use human beings to populate and control their data

- Hybrid Search Engines or Mixed Results Search Engines use a combination of automated and directory-based results

If you search for anything in your favorite crawler-based search engine, you will be presented almost instantly with results that the engine has collected. The results are the search engine’s best guess at what you wanted to find, based on your search phrase. Search engines do make mistakes, and irrelevant pages will sometimes be listed, but with a little patience you’ll eventually find what you’re looking for. Marcus Pitts, an SEO Agency pro and Google guru, wrote a really solid post that covers one of the important parts of SEO basics: algo updates. I recommend you check it out afterwards!

So, just how do search engines determine relevancy? Well, that’s a closely guarded secret, but we can reasonably assume that all major search engines follow the general rules listed below:

Search Engine Ranking

Follow a Standard

Complying with web standards and building your site using XHTML and CSS can benefit your web site and your SEO campaign two-fold. You can give the pages on your web site greater visibility amongst search engines, and the structural information that exists in compliant documents makes it easy for search engines to access and evaluate the information they find.

Standards exist so that even old browsers will understand the basic structure of your documents. Sometimes they can’t understand the latest and greatest additions to the standards, but this is fine, because they’ll still be able to display the content of your site. The same applies to search engine robots, the systems that collect information from your site.

Location + Frequency = Good Keyword Placement

As any good SEO Specialist knows, the location and frequency of keywords on a Web page can have a significant influence on how your site ranks. Your page title (located in the HEAD area) is one place that shouldn’t be ignored. Think of a newspaper (remember them?), and how the headline is the first piece of information that grabs your attention. Well, it works similarly with search engines, except that search engines are more concerned with the keywords used in the title, and less with how clever it is. Pages with a lower search engine ranking often make the mistake of not putting target keywords in the title tag.

Another good idea is to include target keywords in header elements, like H1 or H2. Since these elements are used as headlines and are also usually near the top of the content, search engines will place greater importance on them than on keywords that are in paragraphs or near the bottom of a long page.
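To make the placement advice concrete, here is a minimal Python sketch that reports whether a target keyword appears in a page’s title, H1 and H2 elements. The class name `KeywordLocator`, the function `keyword_locations` and the sample page are all made up for illustration; this is a quick self-check for your own pages, not how any search engine actually evaluates them.

```python
from html.parser import HTMLParser


class KeywordLocator(HTMLParser):
    """Collects the text found inside <title>, <h1> and <h2> elements."""

    TRACKED = {"title", "h1", "h2"}

    def __init__(self):
        super().__init__()
        self._open = []  # stack of tracked tags that are currently open
        self.text = {tag: "" for tag in self.TRACKED}

    def handle_starttag(self, tag, attrs):
        if tag in self.TRACKED:
            self._open.append(tag)

    def handle_endtag(self, tag):
        if self._open and self._open[-1] == tag:
            self._open.pop()

    def handle_data(self, data):
        # Only keep text that sits inside one of the tracked elements.
        if self._open:
            self.text[self._open[-1]] += data


def keyword_locations(html, keyword):
    """Return the tracked elements whose text contains the keyword."""
    parser = KeywordLocator()
    parser.feed(html)
    parser.close()
    needle = keyword.lower()
    return sorted(tag for tag, text in parser.text.items()
                  if needle in text.lower())


# Hypothetical page used only for this example.
page = """<html><head><title>Running Shoes for Every Budget</title></head>
<body><h1>Our Best Running Shoes</h1><h2>Why fit matters</h2>
<p>Read on for details.</p></body></html>"""

print(keyword_locations(page, "running shoes"))
```

If the title is missing from the returned list, that is usually the first thing to fix.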

Search Engine Placement

Build it and they will come

Link analysis is part of every major search engine’s algorithm, and it’s pretty hard to fake out a search engine. If it were that easy, you would just submit your sites to Digg, del.icio.us and reddit and be done with it (commonly known as link seeding). However, the number of inbound links is only one factor that search engines consider. The quality of inbound links is actually much more important. Let’s say you’re selling shoes and would like to rank highly for a certain search term containing the word shoes. Receiving an inbound link from a clothing store would be good, but receiving a link from a site that specializes in just shoes would be far more beneficial for SEO purposes, especially if that website receives a lot of traffic, has a high PageRank and is frequently discussed and linked to from other websites. Inbound links from sites that are seen as authority websites, and which are also highly relevant to the destination site, will almost always improve the destination site’s position in search engine results pages (SERPs).
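To see why link quality can outweigh link quantity, here is a small Python sketch of the textbook PageRank iteration. It is a simplification for illustration only: real search engines combine many more signals and their exact algorithms are not public. The site names and the link graph are invented, and the damping factor of 0.85 is just the value commonly quoted for the original formulation.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank. `links` maps each page to the pages it links to."""
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}

    for _ in range(iterations):
        # Every page starts each round with a small baseline share.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its rank evenly among the pages it links to.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page with no outbound links spreads its rank over all pages.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical link graph: who links to whom.
links = {
    "shoe-blog": ["shoe-review", "my-shoe-shop"],
    "clothing-store": ["shoe-review"],
    "shoe-review": ["my-shoe-shop"],
    "my-shoe-shop": [],
}

ranks = pagerank(links)
for site, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{site}: {score:.3f}")
```

In this toy graph the shop benefits most from the link given by the well-linked review site, because that site has more rank to pass along than a page nobody links to.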

I don’t like spam!

No one likes spam (or a spammer), and that includes search engines. Continually spamming search engines could result in your site being penalized or even banned from search engine listings. If you are spamming search engines (repeatedly using target words on a site when they’re not relevant), then you’re fighting a losing battle. It can be a time-consuming process that may not succeed and may result in severe penalties. Your time would be much better spent on more ethical SEO practices, such as creating relevant content and building up a network amongst your online peers. Consider carefully before you submit to online directories, and do your research first. If you submit your site to a directory that a search engine sees as spam, it could have a detrimental effect on the target website. Should the site get penalized, it will reflect badly upon you.
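One rough way to spot keyword stuffing in your own copy is to measure how much of the text a single word takes up. The Python sketch below does that for one-word keywords. The function name `keyword_density` and both sample sentences are made up for this example, and search engines do not publish any density threshold, so treat the number as a hint for a human editor rather than a rule.

```python
import re
from collections import Counter


def keyword_density(text, keyword):
    """Fraction of the words in `text` that equal `keyword` (single words only)."""
    words = re.findall(r"[a-z0-9']+", text.lower())
    if not words:
        return 0.0
    return Counter(words)[keyword.lower()] / len(words)


# Hypothetical copy used only for this example.
stuffed = "Shoes shoes shoes! Buy shoes, cheap shoes, best shoes."
natural = "Our store sells running shoes and hiking boots for every season."

print(f"stuffed: {keyword_density(stuffed, 'shoes'):.2f}")
print(f"natural: {keyword_density(natural, 'shoes'):.2f}")
```

A page where one word makes up most of the text reads badly to people long before any search engine gets involved, which is usually reason enough to rewrite it.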

Lost? Use a Map

After you’ve submitted your main index page to a search engine, it would be wise to create an XML sitemap. XML sitemaps are your way of telling search engines about information that wouldn’t otherwise be available to them, such as which pages you consider most important, which pages are updated most frequently, and which pages you want search engine bots to crawl more often. Creating a visual HTML-based sitemap can also be beneficial: not only does it give your users a great place to look at all of your pages, but the search engines will also crawl through each one of those internal links, collecting data on every page of the website. You can find a list of free sitemap tools at XML Site Map Tools.
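If you would rather build the file yourself, a basic XML sitemap is simple enough to generate with a short script. The Python sketch below uses the standard sitemap elements (`loc`, `lastmod`, `changefreq`, `priority`); the function name `build_sitemap`, the example.com URLs and the values are placeholders, so adjust them to your own site.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemap protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from a list of dicts; only 'loc' is required."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for entry in entries:
        url = ET.SubElement(urlset, "url")
        # Optional fields are written only when the entry provides them.
        for field in ("loc", "lastmod", "changefreq", "priority"):
            if field in entry:
                ET.SubElement(url, field).text = str(entry[field])
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages used only for this example.
pages = [
    {"loc": "https://www.example.com/", "lastmod": "2024-01-15",
     "changefreq": "daily", "priority": 1.0},
    {"loc": "https://www.example.com/about",
     "changefreq": "yearly", "priority": 0.3},
]

sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

Save the output as sitemap.xml in your site root. The `priority` and `changefreq` values are hints to crawlers, not commands, so a high priority does not guarantee more frequent crawling.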

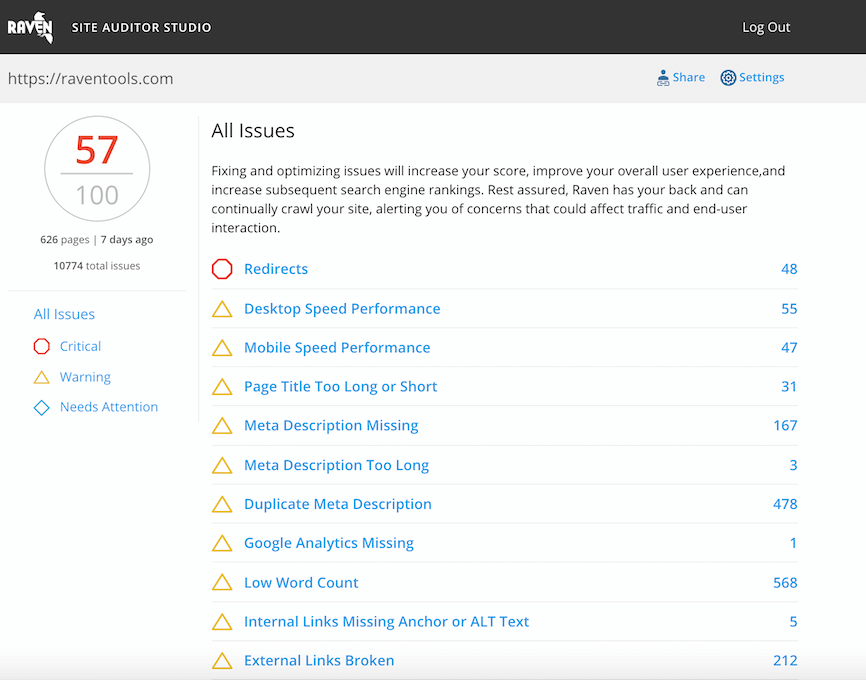
