After 13 years in SEO, I’ve seen a lot of websites.

After a while, one starts to notice trends – the same basic SEO errors repeated over and over.

Most of these are easy to find and fix – the kinds of things that any decent SEO should be aware of. The kinds of things that would be found in a 10-minute “site clinic” session at a search conference.

If you have a site and don’t have an SEO, these are a few of the things you can quickly go and check yourself. If you do have an SEO, they had better have brought any of these issues to your attention. If they haven’t (and sadly, I see a lot of clients who have had “SEOs” for years but still have these problems), you may want to question if you have the right SEO working for you.

Here are 10 of the most common SEO mistakes.

1. Duplicate versions of the home page

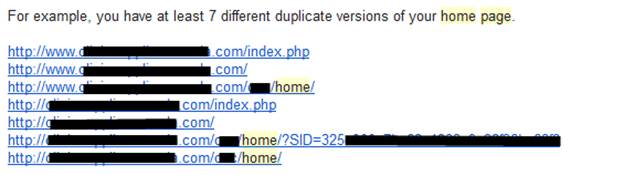

One of the first things I check with a site is whether there are duplicate versions of the home page.

Check out the below from one of my clients. So far we have found seven different versions of the home page, all accessible to the crawlers and with no canonical tag on the pages.

If you are serving duplicate versions of your home page to the engines, they are duplicate content. The engines will usually figure out which version to index, but sometimes they get it wrong – and why make them think if you don’t have to?

Another issue: If you have all your internal links pointing at example.com/default.aspx or example.com and the “real” version of the page is www.example.com, you’re splitting the link equity between two or more URLs. You want all that linky goodness pointed at the one URL you want to rank in the engines.

One of the easiest ways to find duplicate versions is to click on the logo in your site header. Does it go to the canonical (official) version of your home page? That is, if your home page is “example.com,” is that where the link from the logo actually leads? At least 50% of the sites I audit end up at something like “example.com/default.aspx” or “example.com/index.php.”

Another way to look for this is to check whether “www.example.com” and “example.com” both resolve in the browser. Whichever version you do not want to serve to users should 301 redirect to the one you do want. A great place to check whether a URL is redirecting correctly is URIValet.com. Just enter the URL and it will tell you if it’s 200 (normal page), 301 (permanent redirect), 302 (temporary redirect), etc.

How do you fix it? The best solution is to 301 redirect all non-canonical versions of the home page URL to the official version you want to show to users and to rank in the SERPs. If you can’t do that, the next best solution is to at least make sure the duplicate versions of the URL have the canonical tag on them pointing to the canonical or official version of the URL.
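If you want to be systematic about it, the check can be scripted. Here is a minimal Python sketch, not a definitive tool: it lists the usual duplicate home page URLs for a canonical URL and decides whether a response counts as fixed. The `DEFAULT_DOCUMENTS` list and both function names are my own assumptions for illustration, and you would still need to fetch each URL (or paste it into a header checker) to get the status code and `Location` header.

```python
from urllib.parse import urlsplit

# Hypothetical list of default-document names; extend it for your own platform.
DEFAULT_DOCUMENTS = ("index.html", "index.php", "default.aspx")


def home_page_variants(canonical_url):
    """Return common duplicate home page URLs that should 301 to canonical_url."""
    parts = urlsplit(canonical_url)
    host = parts.netloc
    bare = host[4:] if host.startswith("www.") else host
    variants = []
    for scheme in ("http", "https"):
        for candidate_host in (bare, "www." + bare):
            root = f"{scheme}://{candidate_host}/"
            variants.append(root)
            variants.extend(root + doc for doc in DEFAULT_DOCUMENTS)
    canonical = f"{parts.scheme}://{host}/"
    # Everything except the canonical root is a duplicate candidate.
    return [url for url in variants if url != canonical]


def is_fixed(status, location, canonical_url):
    """True when a variant answers with a 301 that points at the canonical URL."""
    if status != 301 or location is None:
        return False
    return location.rstrip("/") == canonical_url.rstrip("/")
```

Anything in the list that returns a 200, or a 302, or a 301 to somewhere other than the canonical URL, is a candidate for the fix described above.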

2. Un-optimized title tags

This should be a no-brainer, but I see a lot of sites where the title tag is something like “Home,” “Home Page,” “Brand Name” or some combination of those. One recent site I audited simply had the brand name, twice, as the title tag.

The title tag is one of the most important on-page elements for SEO. Not only does it help you rank, but it’s what potential users and customers see in the SERP when they see your listing.

Unless you want to rank for the term “home page,” make sure the title has something meaningful in it – ideally including one of your best keywords near the start of the tag.
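A quick way to spot these on your own pages is to pull the title out of the HTML and compare it against a list of placeholders. The sketch below uses only Python’s standard library. The `GENERIC_TITLES` set and the function names are assumptions of mine (the only placeholders named above are “Home” and “Home Page”), so adjust them to whatever your CMS spits out.

```python
from html.parser import HTMLParser

# Hypothetical placeholder titles; "home" and "home page" come from the article.
GENERIC_TITLES = {"home", "home page", "untitled", "welcome"}


class TitleParser(HTMLParser):
    """Collect the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self._done = False
        self.title = ""

    def handle_starttag(self, tag, attrs):
        if tag == "title" and not self._done:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title" and self._in_title:
            self._in_title = False
            self._done = True

    def handle_data(self, data):
        if self._in_title:
            self.title += data


def extract_title(html):
    """Return the page title with whitespace collapsed, or '' if there is none."""
    parser = TitleParser()
    parser.feed(html)
    parser.close()
    return " ".join(parser.title.split())


def title_problems(title, brand=None):
    """Return a list of issues found in one title tag."""
    normalized = title.strip().lower()
    if not normalized:
        return ["missing title"]
    problems = []
    if normalized in GENERIC_TITLES:
        problems.append("generic title")
    # Strip the brand and separators; if nothing is left, the title is brand only.
    if brand and normalized.replace(brand.lower(), "").strip(" -|:") == "":
        problems.append("brand name only")
    return problems
```

An empty list from `title_problems` only means the title is not an obvious placeholder. Whether it contains a useful keyword is still a judgment call.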

3. Duplicate title tags

It’s great if you have an optimized title tag for your home page, but please make sure it’s not the only title tag you have on your site. Yes, it sounds silly but I’ve seen plenty of smaller sites with the exact same title tag on EVERY. SINGLE. PAGE. Unless there’s only one keyword you ever want to rank for, and you only want the engines to index one page on your site, make sure you don’t have a ton of duplicates.

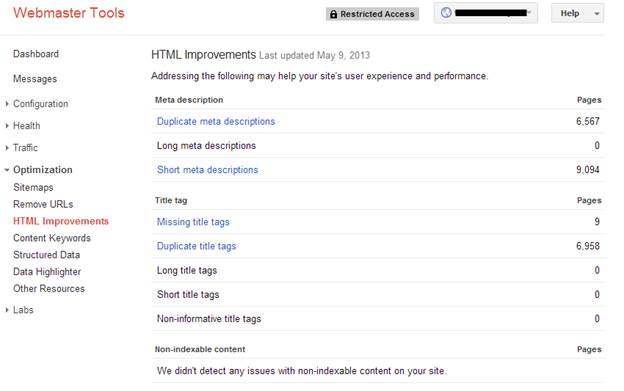

It’s easy to check for duplicate titles. The easiest way is to check Google Webmaster Tools (or Bing Webmaster Tools). If you don’t have these installed, make it the first thing you do after reading this! In Google WMT, just go to Optimization > HTML Improvements.

You can click on the links to see which tags are duplicated and which pages they are on.

Two other great tools I like to use to diagnose this are the Moz Crawl Diagnostics Summary and the desktop app Screaming Frog.

Another common problem you can diagnose through looking at duplicate titles is duplicate content. Frequently if pages have the same title, they might have the same content, too. Click through on the links for the pages with the same titles to see the pages.
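If you have a crawl export (URL and title for each page), grouping by title takes a few lines of Python. This is a rough sketch that assumes you already have the data as a dictionary. The function name and the sample URLs are made up for illustration.

```python
from collections import defaultdict


def find_duplicate_titles(titles_by_url):
    """Group URLs by normalized title and keep only titles used more than once."""
    groups = defaultdict(list)
    for url, title in titles_by_url.items():
        # Collapse whitespace and ignore case so near-identical titles match.
        key = " ".join(title.split()).lower()
        groups[key].append(url)
    return {title: sorted(urls) for title, urls in groups.items() if len(urls) > 1}


# Usage with a hypothetical crawl export.
crawl = {
    "https://www.example.com/": "Blue Widgets | Acme",
    "https://www.example.com/index.php": "Blue Widgets | Acme",
    "https://www.example.com/about": "About Acme",
}
duplicates = find_duplicate_titles(crawl)
```

Each group of URLs that comes back is worth opening side by side, since pages that share a title often share content too.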

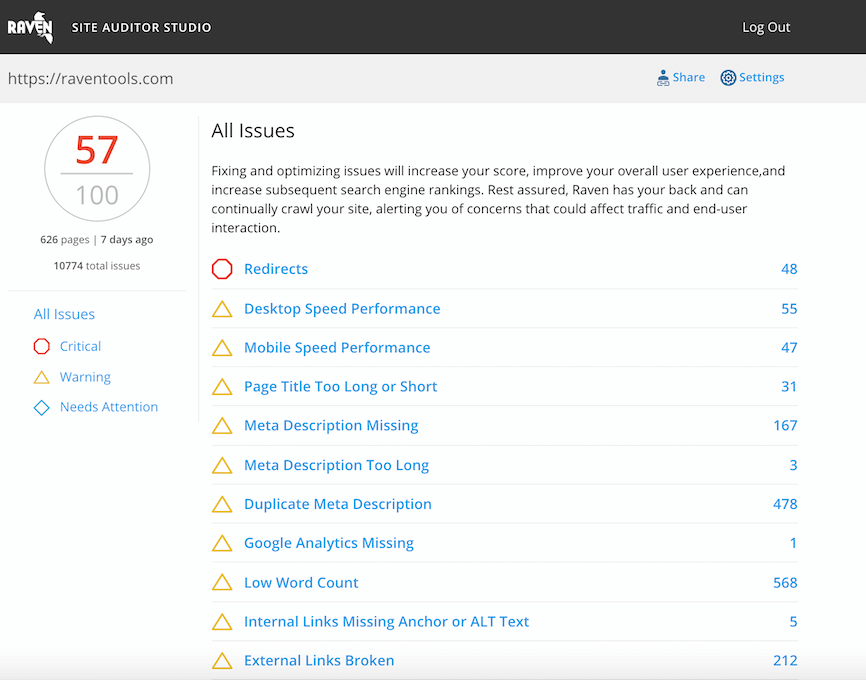

(Editor’s Note: Raven customers can check for duplicate titles, duplicate content and much more in Site Auditor).

4. Duplicate content

Almost every site I’ve ever worked on or for has had duplicate content problems. They’re especially common with newer sites that are built as “platforms,” when using out-of-the-box CMS systems, or when an SEO has not been involved in the architecture phase of building a site.

Finding and fixing duplicate content can be one of the most challenging parts of auditing a site. Here are a couple of simple ways to tell if you might have a problem.

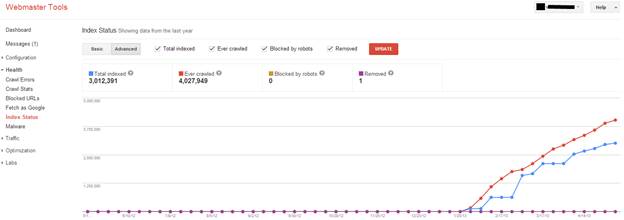

A simple way to check is in Google Webmaster Tools (if you are sure your XML sitemap is complete and accurate).

Check out the site below, for example. According to the sitemap, it’s telling Google it has about 157K pages. (You can find this in Optimization > Sitemaps).

However, Google has indexed a little over 3 million pages.

Either the sitemap is incomplete or there’s a lot of duplicate content. In this case, it was a whole lot of duplicate content. (By the way, 3 million was just the pages Google hadn’t already excluded for being duplicates – the site actually had about 8 million URLs when all the duplicates were added up.)
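The arithmetic behind that check is simple enough to sketch in Python. The thresholds below (`high` and `low`) are arbitrary assumptions of mine, not numbers from Google, so treat the output as a prompt to investigate rather than a verdict.

```python
def index_ratio(sitemap_urls, indexed_urls):
    """Indexed pages per URL listed in the sitemap."""
    if sitemap_urls <= 0:
        raise ValueError("sitemap_urls must be positive")
    return indexed_urls / sitemap_urls


def diagnose(sitemap_urls, indexed_urls, high=2.0, low=0.5):
    """Very rough reading of the sitemap-versus-index gap (thresholds are guesses)."""
    ratio = index_ratio(sitemap_urls, indexed_urls)
    if ratio >= high:
        return "possible duplicate content or incomplete sitemap"
    if ratio <= low:
        return "possible crawlability problem"
    return "roughly in line"


# The site above: about 157K sitemap URLs against roughly 3 million indexed pages.
ratio = index_ratio(157_000, 3_000_000)  # about 19 indexed pages per sitemap URL
```

The same function covers the opposite situation, where far fewer pages are indexed than the sitemap lists.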

5. 404 errors

With 404 errors, the problem can swing in one of two directions: Too many or not enough.

Too many 404 errors can tell the engines there is a problem with the overall quality of the site. It’s especially troubling when pages that have good external links pointing to them result in a 404 (this often happens with site redesigns, discontinued product categories, etc.).

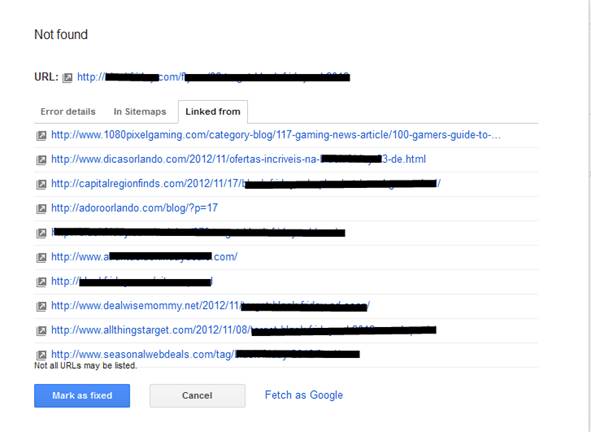

For instance, here’s a 404 page I found with at least 7 good external links pointed at it.

You are flushing your hard-earned link equity down the toilet if you let these pages go uncorrected. The easiest place to look for them is in Google WMT under Health > Crawl Errors > Not Found.

The other common problem with 404s is that you don’t have enough of them. Returning a 301 redirect for a page or URL that has never actually existed on your site (as far as the engines know) is called a soft 404. This happens when broad 301 redirects are created to catch wide swaths of URLs rather than coding them on a more granular basis. It’s a signal of poor quality. You can find these in Google WMT under Health > Crawl Errors > Soft 404.

As an aside, it makes sense to allow some pages to 404 even when they have existed on your site. It used to be common practice to redirect every single discontinued product page back to a category page or the home page. But if a product hasn’t been around for long, has no links or social shares, and is a fleeting, ephemeral page, it’s fine to let it 404 and tell the engines that “this page used to exist but now it’s gone for good so you can ignore it.”
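The rules of thumb in this section can be written down as a small triage function. This is a sketch of my reading of them, not a standard: the `DeadPage` fields and the `triage` name are hypothetical, and you would fill in the link counts from whatever link tool you use.

```python
from dataclasses import dataclass


@dataclass
class DeadPage:
    url: str
    external_links: int  # good external links pointing at the URL
    ever_existed: bool   # False for URLs that were never real pages


def triage(page):
    """Suggest the status code a dead URL should return."""
    if not page.ever_existed:
        # Blanket redirects for URLs that never existed create soft 404s.
        return "404"
    if page.external_links > 0:
        # Keep the link equity by redirecting to the closest relevant page.
        return "301"
    # Short-lived page with nothing pointing at it: let it go.
    return "404"


# Usage with made-up pages.
pages = [
    DeadPage("/old-category/", external_links=7, ever_existed=True),
    DeadPage("/flash-sale-item/", external_links=0, ever_existed=True),
    DeadPage("/asdf-typo/", external_links=0, ever_existed=False),
]
plan = {page.url: triage(page) for page in pages}
```

Social shares are left out of the sketch for brevity. If you track them, they belong in the same condition as external links.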

6. No deep links from the home page

Your home page is going to naturally get the most link equity on your site. Text links in the body of your home page are the best way to flow that link equity through your site to your main product, category, or content pages.

Splash pages or home pages that are all graphics and navigation are pretty for users, but they don’t get the job done for passing link equity. Find a way to get text links from your highest link equity pages to your most important pages.

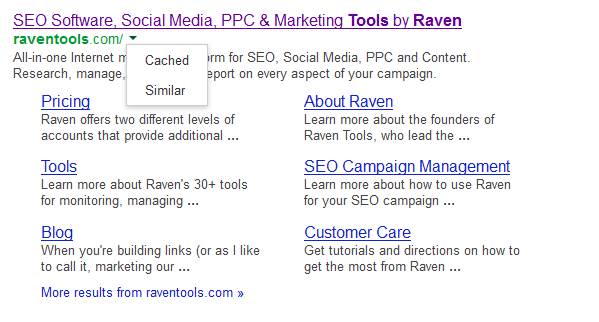

A quick way to check this is to look at the Google text cache for your page. Go to your listing in the SERP, click the drop-down arrow beside it (Google recently moved this), and click on Cached.

Then click on Text-only Version.

Look at the page. Can you see links to all your most important pages and site sections?

7. Too many links from your home page

Link equity flows like water through a site. You want to channel it and control it.

Having hundreds of links on your home page is like dumping that water in a colander. It’ll flow, but each channel is only going to get a tiny share. You can check for too many home page links the same way you looked at the text-cache for too few links. The aforementioned Moz Crawl Diagnostics Summary will also give you a list of pages with “too many” links.
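If you would rather count than eyeball, here is a small Python sketch that tallies the links in a page’s HTML and separates links with anchor text from image-only links. It uses only the standard library. The `max_links=100` cutoff is purely an assumption for illustration, since there is no official number for “too many.”

```python
from html.parser import HTMLParser


class LinkCounter(HTMLParser):
    """Collect (href, anchor_text) pairs for every <a href> in a page."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self._href = href
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def summarize_links(html, max_links=100):
    """Count all links, text links, and flag pages over a chosen limit."""
    parser = LinkCounter()
    parser.feed(html)
    parser.close()
    text_links = [href for href, text in parser.links if text]
    return {
        "total": len(parser.links),
        "text_links": len(text_links),
        "too_many": len(parser.links) > max_links,
    }
```

A home page with a low `text_links` count has the problem from #6, and one that trips `too_many` has the problem from #7.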

8. Un-crawlable site

If you have content you don’t link to or that the engines can’t crawl, you obviously have a problem.

One way to discover this is the inverse of the duplicate content check. If you have 100K pages in your sitemap and Google has indexed 10 pages, you likely have an issue where the site is not crawlable.

If you don’t trust your sitemap, crawl the site with a tool like Screaming Frog. It can be configured to ignore the instructions in your robots.txt file so that it crawls all the pages it can find. If there is a big discrepancy between that count and the number of pages Google has indexed, you might have crawling issues.
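One common cause of a crawl gap is a robots.txt rule that blocks more than anyone intended. Python’s standard library can test URLs against a robots.txt file, so a rough check looks like the sketch below. The `blocked_urls` helper and the sample rules are mine, and the standard-library parser may not interpret every rule exactly the way Googlebot does, so treat the result as a first pass.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given robots.txt text disallows for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Usage with a made-up robots.txt that blocks a whole product section.
robots = "User-agent: *\nDisallow: /products/\n"
urls = [
    "https://www.example.com/",
    "https://www.example.com/products/blue-widget",
]
blocked = blocked_urls(robots, urls)
```

If important pages show up in the blocked list, that explains at least part of the gap before you look at anything more exotic.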

Of course another quick way to check how many pages Google has indexed is the good old “Site:” command in Google.

9. Unreasonable SEO expectations

SEO isn’t easy, fast, or cheap. Gone are the days of scalable, easy tactics that exploited loopholes in the algorithms.

If you want to rank highly now, you have to deserve it. Many site owners think that SEO can make up for the fact that the site is not exceptional, but that’s just not the case.

Take a good hard look at your site and your business. If you truly deserve to rank well (and drive organic traffic), SEO can help you get there.

But if your UI sucks, your design is dated, your prices are too high, your content is self-centered and boring or your customer service is lousy, fix that stuff first. Then do SEO stuff to get you where you should be.

In the old days you could be successful at SEO and generate the money to make your site great. Now you have to spend the time and money and resources to be great, and then you can be successful at SEO.

10. Hiring a crappy SEO

I hate to say it, but this is still one of the most common SEO mistakes I see business owners making.

Whether it’s because you don’t want to (or can’t) allocate the resources or because your SEO agency/consultant is lazy, incompetent, out-of-date, or just taking you for a ride, hiring an SEO that isn’t on his or her game is the most dangerous SEO mistake of all.

Hiring a crappy SEO used to be just a waste of money at worst, but now they can bury you. I’m not talking black-hat stuff here either. Even in my short time as a full-time consultant (after many years in-house) I’ve talked to site owners who have SEOs who:

- Missed many of the items above

- Built large numbers of crappy, money keyword anchor text focused links from spammy blog networks and directories (Ever heard of Penguin?)

- Claim to have built links that they couldn’t show the client because it would give away their “secrets”

What can you do?

If you are a site owner, you owe it to yourself to know enough to do some simple checking on your own site. Learn a bit about how to check the links your SEO is building for you. Get a second opinion. No decent SEO should be scared of another SEO reviewing what they do. If you don’t feel comfortable doing it yourself, you can always find a good SEO reseller that does all the work while making you look great.

At the very least, make sure you have Google and Bing Webmaster Tools and Google Analytics set up. If you find something that looks hinky, ask your SEO or find someone who can help you out.

Don’t let these common mistakes kill your SEO efforts.


your #6 tip; no deep links from home page is spot on. I add a layer of tier 2 socials to get multiple SERPs on page 1 of Google for a longtail as in “is social shares the new seo” where 4+ serps have my name on it 😉

Thanks Neil! You make a good point. I’m seeing more and more evidence lately of social shares actually moving rankings. I haven’t tested it rigorously yet but I’ve seen some anecdotal evidence.

Fantastic post Rob! The one I am seeing more than any right now is being hurt by automated incorrect canonicals. One furniture inventory site with thousands of products and not one of their pages in SERPs. Why? Because their rel=canonical tag was the same on every page. Just one line can cause a boat load of problems.

That is an ugly one. I had one recently where the canonical tag URL was just whatever the URL was in the browser. Every page just canonicaled back to itself rather than the canonical version. Seeing WP issues as well with other plugins messing up the WordPress SEO plugin and causing canonical tags with relative URLs rather than absolute.

Funny you mention that Carlos… I am working with some sites right now too, and without the rel=canonical tag set correctly we would have run into major duplication issues. That tag is so important, even if you have valuable information that has to be shared across multiple sites. A lot of people overlook that.

These look all too familiar. 3 million pages indexed is a total nightmare.

Regarding #5 404 errors. I like to use Open Site Explorer > Top Pages > CSV Export filtered by HTTP Status to find pages with inbound links resulting in 404 errors.

Nice tip! Glad you liked the post 🙂

#9 – if more SEO’s told clients this it w

Totally worthy Post… Actually i am finding that kind of research since long.. finally i have got it… Thank you so much….

I am looking for that kind of research since long.. And finally get it.. Thank you so much..

I found your blog via michaeldorausch.com. I am developing a concept and brushing up on seo skills — the landscape has changed a lot. Thanks for the great information I picked up today in this article as well as on your article regarding seo and comments. I also appreciate the commenters providing useful insights about deep links and open site explorer. I will definitely dig for more info and check out the recommended tools.

Question:

Our business hired a company to do our SEO. With a limited number of words to optimize and in a competitive industry, we focused all of the keywords on the few products that will sell the most, thus, leaving some product pages without words to optimize. Is it okay if we use the same general meta tags on these pages as our homepage? If not, what would you recommend? I’ve only been involved with SEO for about 3 weeks so my knowledge is very limited.

Logan, you definitely don’t want to use the same metas on other pages. This can cause issues when it comes time for search engines to decide which page to rank for a particular keyphrase. Additionally you don’t want your meta data to be misleading to searchers. If you don’t want to optimize those pages, or just don’t have the budget to, that is fine, but I would advise at least making the meta data unique to each page, regardless of how “optimized” they are.

Thanks for the update on SEO mistakes. SEO is confusing to me and with all of the changes in Google it’s even more so.

What is good this week might not work next week or next month. I’m curious, how many links is too many links? Does anyone know?

Maybe Matt Cutts could tell us. I would be interested to know.
